Building tools for teams: the AI technology behind our automated user experiences

In this article, the third in our “Tools for teams” series, we asked Martin Hovin, Data Scientist at Huddly, to explain how this technology benefits our users, and to tell us more about how we use AI and Machine Learning to make collaboration easy.

At InfoComm19, we introduced Huddly Canvas, our latest AI technology for capturing and enhancing content on analogue whiteboards in the meeting room. Canvas will be used to create innovative automated experiences for our users, similar to our groundbreaking Genius Framing feature, which detects the people in the camera’s field of view and frames them perfectly for participants at the far end.

What makes Huddly unique is not only the cutting-edge nature of helpful AI features like these, but also the fact that the AI compute takes place on the camera itself, and the speed with which we can develop new features and ship them to our users. Three factors enable this technological leadership:

- Huddly cameras are edge-based AI devices

- They are connected to an upgradeable software platform for the latest releases

- They use Machine Learning-driven improvements

Thank you to Bendik Kvamstad, Senior Data Scientist, for his contributions to this article.

Martin Hovin, Data Scientist at Huddly

Huddly IQ’s AI features

At Huddly, we build tools for teams – powerful products and features that use AI to enable team collaboration and make people’s workday easier. We can separate Huddly’s AI features into three categories:

- Huddly Genius features automate previously manual processes, allowing you and your team to focus on collaborating and getting things done.

- Huddly InSights analytics give you a better understanding of how your meeting spaces are being used, so you can make data-driven decisions on meeting room investment and management.

- Huddly Canvas makes it easy to bring analogue whiteboard content into the digital meeting space, supporting the way you work today.

Huddly’s AI features bring teams all sorts of benefits, but what is it about the way we do AI that makes our products and experiences so special?

The Huddly IQ camera is truly a cutting-edge piece of hardware, with many different high-quality components. But for us in the AI teams, the most important one is the Intel Movidius Myriad X chip – that’s where the magic happens. The Myriad X is a VPU (vision processing unit) designed specifically to accelerate Machine Learning, and it enables us to run Genius features directly on the camera rather than on an attached PC or in the cloud.

Running AI on the device itself is called edge computing. It ensures that no information leaves the meeting room except your conference call, while still giving you the benefits of computationally heavy algorithms such as Machine Learning. Edge computing enables Huddly IQ to make real-time decisions with lower latency and less bandwidth than it would need if the computation ran on an attached PC or in the cloud, and it also brings increased accuracy, privacy, and security.
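To put rough numbers on the bandwidth argument, the Python sketch below compares moving raw frames off the camera with moving only detection results. Every figure in it is an assumption for illustration, not a Huddly IQ specification: uncompressed 1920x1080 RGB video at 30 fps, and ten people each described by four 32-bit coordinates. Real video links compress heavily, so the true gap is smaller, but the orders of magnitude show why producing results on the device is attractive.

```python
# Back-of-the-envelope bandwidth comparison. All inputs are illustrative assumptions.


def raw_video_bytes_per_second(width: int, height: int, bytes_per_pixel: int, fps: int) -> int:
    """Bytes per second needed to move uncompressed frames off the device."""
    return width * height * bytes_per_pixel * fps


def detection_bytes_per_second(people: int, values_per_box: int, bytes_per_value: int, fps: int) -> int:
    """Bytes per second needed to move only bounding boxes (x, y, w, h) off the device."""
    return people * values_per_box * bytes_per_value * fps


# Assumed: 1920x1080 RGB frames (3 bytes per pixel) at 30 fps.
video = raw_video_bytes_per_second(1920, 1080, 3, 30)
# Assumed: 10 people, 4 coordinates each, stored as 32-bit (4-byte) floats, at 30 fps.
boxes = detection_bytes_per_second(10, 4, 4, 30)

print(f"raw frames: {video / 1e6:.1f} MB/s")
print(f"boxes only: {boxes / 1e3:.1f} kB/s")
print(f"ratio:      {video // boxes:,}x")
```

Under these assumptions the raw stream needs about 186.6 MB/s, while the boxes need about 4.8 kB/s.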

Furthermore, our cameras are software-defined: their features are delivered through software that can be updated after purchase, a product development approach that is revolutionary in the video conferencing industry. To give an analogy, let’s take the humble toaster. Imagine that radically innovative, mind-blowing new toaster technology was developed next week. How long would it take manufacturers to design new toasters with this technology? How long would it then take to start production and begin shipping these newfangled ultra-toasters to customers? Now imagine that, instead, the manufacturers only had to change a few lines of code and send an over-the-air update to the toaster you already own. That’s how we do innovation here at Huddly.

How Huddly IQ uses Machine Learning

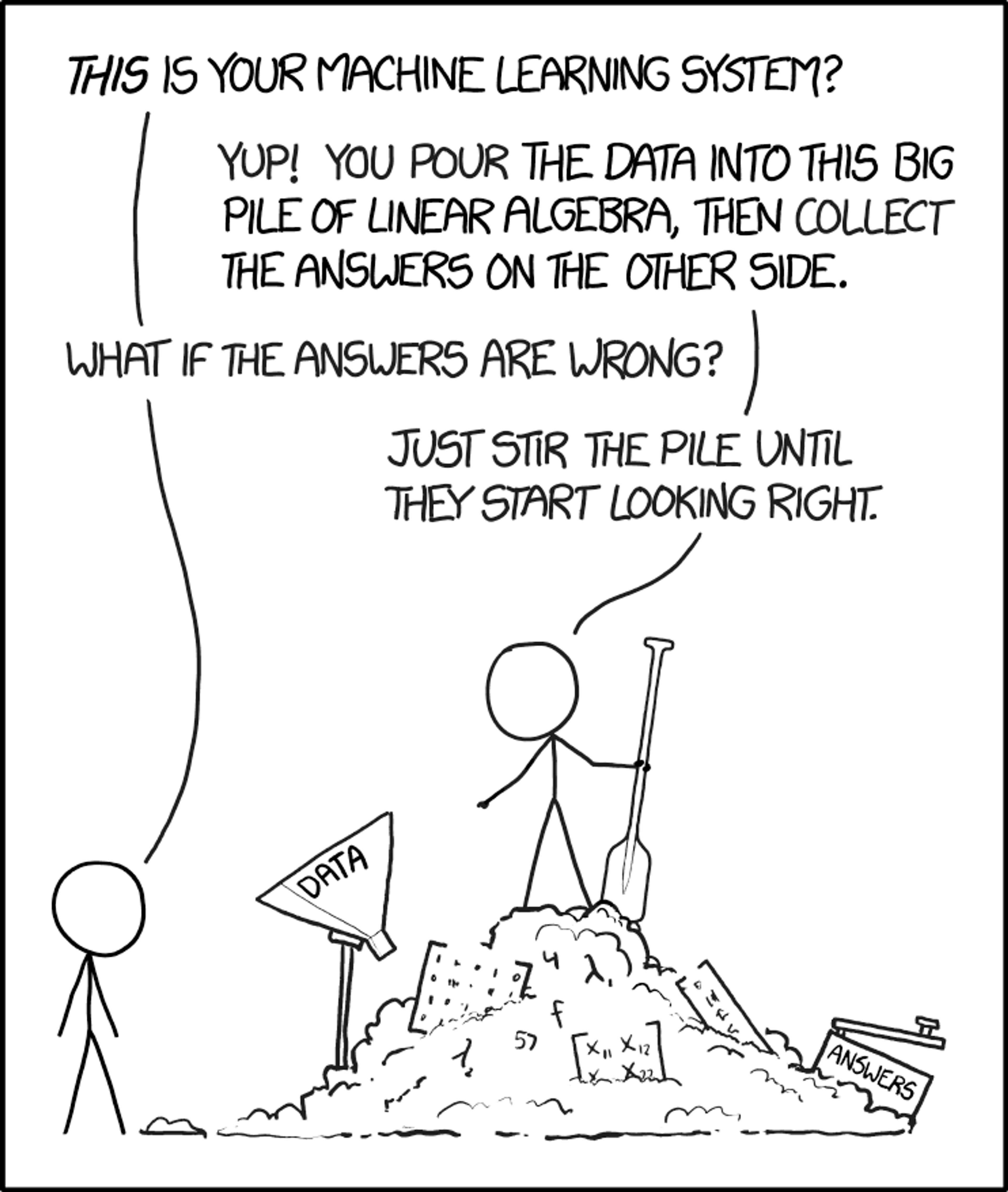

The human brain is a flexible biological structure capable of a virtually endless number of tasks. Machine Learning is the field of study that attempts to emulate some of our brain’s ability to recognize patterns, and it is a subset of the larger field of AI. It’s one of the most exciting and fast-developing fields in computer science, with new techniques and theories appearing every month that have the potential to bring dramatic changes to both performance and development.

Huddly IQ uses Machine Learning to deliver great automated user experiences. There is a lot of nuance in the world of AI but, to put it simply within the context of Huddly IQ, a Machine Learning model interprets the meeting room, and the Genius Framing feature then makes decisions based on that interpretation. The camera is able to detect the people in the room and frame them in the best possible way.

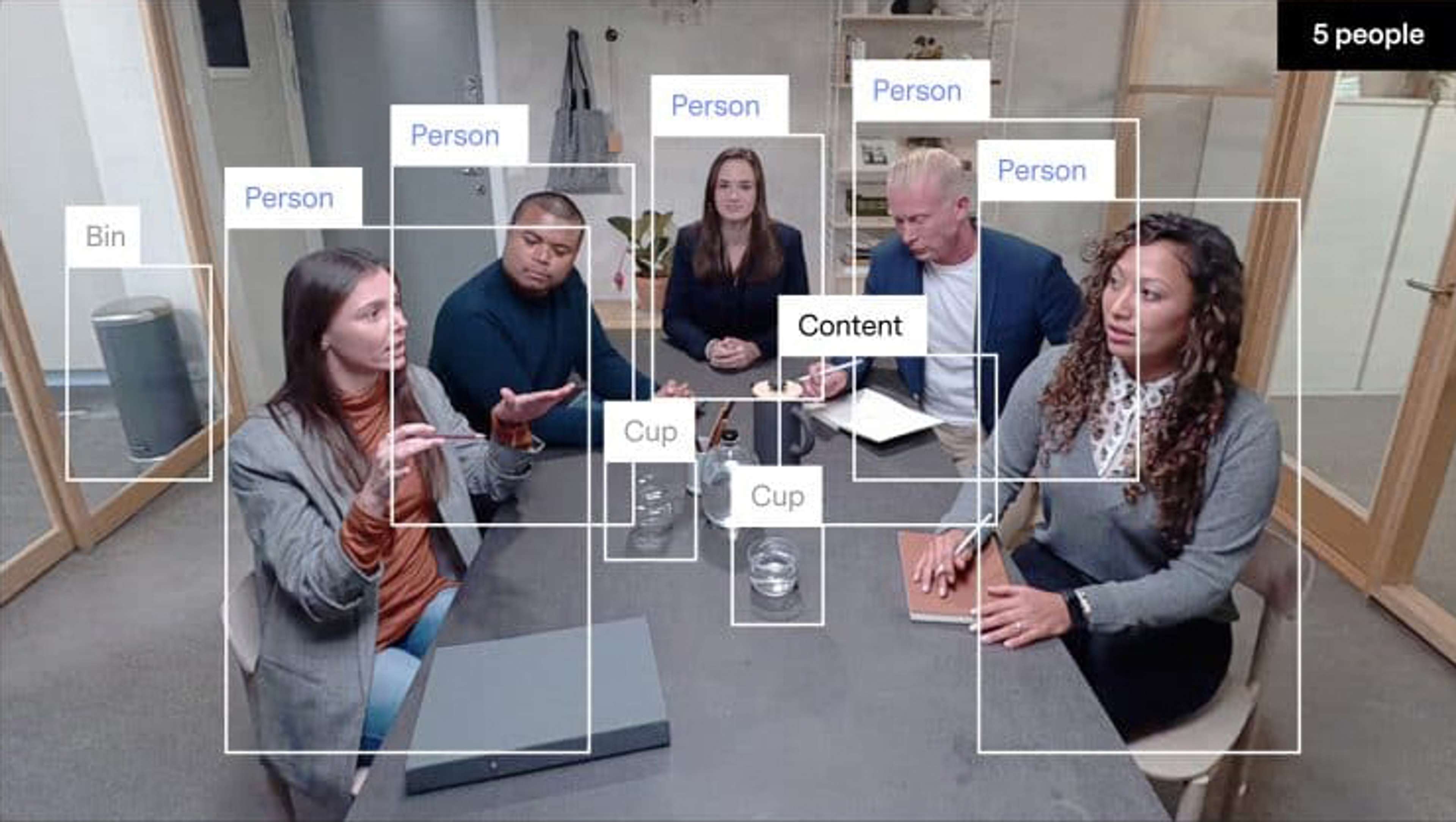

This requires a specific interpretation of the meeting room, which is provided by an object detection algorithm. Object detection is a Machine Learning technique that combines locating objects in an image (localization) with identifying what each object is (classification).
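In code, a detector’s output for one image can be thought of as a list of detections, each pairing a localization (a bounding box) with a classification (a label plus a confidence score). The minimal Python sketch below is our own illustration: the `Detection` class, its field names and the 0.5 confidence threshold are hypothetical and do not describe the data format used inside Huddly IQ.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Detection:
    """One detected object: where it is (localization) and what it is (classification)."""
    x: float       # left edge of the bounding box, in pixels
    y: float       # top edge of the bounding box, in pixels
    width: float   # box width, in pixels
    height: float  # box height, in pixels
    label: str     # predicted class, e.g. "person" or "chair"
    score: float   # model confidence in [0, 1]


def confident_people(detections: List[Detection], min_score: float = 0.5) -> List[Detection]:
    """Keep only 'person' detections the model is reasonably sure about."""
    return [d for d in detections if d.label == "person" and d.score >= min_score]


# Example: what a detector might report for one frame (made-up values).
frame = [
    Detection(100, 200, 80, 160, "person", 0.92),
    Detection(400, 210, 75, 150, "person", 0.35),  # too uncertain, dropped
    Detection(700, 300, 120, 60, "chair", 0.88),   # not a person, dropped
]
print(confident_people(frame))
```

Keeping the label and score alongside the box lets downstream features decide for themselves which classes and confidence levels they care about.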

At its core, the object detection algorithm is a bunch of clever math and a set of instructions on how to detect specific objects in an image. For Genius Framing, to train Huddly IQ to understand a meeting room, we supply it with training data in the form of thousands of images.

What the Genius Framing model can see in a meeting room.

How do we train the camera to understand the meeting room?

Imagine the Machine Learning model as a black box with a hole at each end, one for input and one for output. We feed an image of people in a meeting into one end of the box, and out of the other end comes the box’s (i.e. the model’s) interpretation of the image. Then we compare that interpretation to the correct answer, calculate just how wrong it was, and send that error back through the box (using a technique called backpropagation) so that its internal parameters can be adjusted to do better next time.
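The feed, compare and correct loop can be sketched in a few lines of Python. This is a toy under clear simplifications, not Huddly’s training code: the “black box” is a single-layer logistic model rather than a deep object detector, each “image” is reduced to two invented numbers, and the label says only whether a person is present. With a single layer, the gradient that backpropagation would compute can be written out by hand; in a deep network the same chain-rule bookkeeping is applied layer by layer.

```python
import math
import random
from typing import List, Tuple

# Each toy "image" is reduced to two invented numbers; label 1 means "person present".
Example = Tuple[List[float], int]


def predict(weights: List[float], bias: float, features: List[float]) -> float:
    """The 'black box': a single-layer model that outputs a probability in (0, 1)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def train(data: List[Example], epochs: int = 200, lr: float = 0.5, seed: int = 0) -> Tuple[List[float], float]:
    """Stochastic gradient descent on the cross-entropy loss."""
    rng = random.Random(seed)
    examples = list(data)  # shuffle a copy, not the caller's list
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        rng.shuffle(examples)
        for features, label in examples:
            p = predict(weights, bias, features)  # feed the image in, read the answer out
            error = p - label                     # how wrong it was: d(loss)/dz for sigmoid + cross-entropy
            # Send the error back: by the chain rule, each weight's gradient is error * its input.
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


data = [([0.9, 0.8], 1), ([0.8, 0.9], 1), ([0.1, 0.2], 0), ([0.2, 0.1], 0)]
weights, bias = train(data)
print(round(predict(weights, bias, [0.85, 0.85]), 3), round(predict(weights, bias, [0.15, 0.15]), 3))
```

After training, the first printed probability (a “person-like” input) is high and the second is low; a real detector learns the same way, only with millions of parameters and pixels as input.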

This cycle of feeding in an image, measuring the error and correcting the model is repeated millions of times, and with each image, the model gradually gets better at understanding where people are located in the room. Before we release a new model, it is automatically tested on thousands of images and videos so that we can ensure the highest-quality user experience.
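One common way to score a people detector automatically is to match its boxes against hand-labelled ones using intersection over union (IoU) and report precision (how many detections were real people) and recall (how many real people were found). The Python sketch below shows that idea; the metric choice, the 0.5 IoU threshold and the greedy matching are our assumptions, not a description of Huddly’s actual test suite.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2): top-left and bottom-right corners


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (1.0 = identical, 0.0 = disjoint)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_matches(preds: List[Box], truth: List[Box], thresh: float = 0.5) -> int:
    """Greedy one-to-one matching: each ground-truth box can be claimed by at most one prediction."""
    unmatched = list(truth)
    matches = 0
    for p in preds:
        best = max(unmatched, key=lambda t: iou(p, t), default=None)
        if best is not None and iou(p, best) >= thresh:
            unmatched.remove(best)
            matches += 1
    return matches


def evaluate(test_set: List[Tuple[List[Box], List[Box]]], thresh: float = 0.5) -> Tuple[float, float]:
    """Precision and recall pooled over (predictions, ground truth) pairs, one pair per image."""
    tp = sum(count_matches(p, t, thresh) for p, t in test_set)
    n_pred = sum(len(p) for p, _ in test_set)
    n_true = sum(len(t) for _, t in test_set)
    precision = tp / n_pred if n_pred else 1.0  # no predictions: nothing was wrong
    recall = tp / n_true if n_true else 1.0     # no people: nothing was missed
    return precision, recall


test_set = [
    ([(0, 0, 10, 10), (50, 50, 60, 60)], [(1, 1, 10, 10)]),  # one hit, one false alarm
    ([], [(0, 0, 5, 5)]),                                     # one missed person
]
print(evaluate(test_set))  # (0.5, 0.5)
```

A release check could then require both numbers to stay above chosen thresholds on a held-out set; what those thresholds should be is a product decision that this article does not specify.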

Huddly Canvas applies a similar technique to detect and enhance whiteboard content.

After training and testing, the Genius Framing model is able to output the location of people in the conference room. On the front end, this information is used by our Genius Framing feature to find the optimal framing of the meeting participants, while on the back end, it is used by our InSights API for people count, room usage and occupancy status. In the future, Huddly IQ will be able to produce more sophisticated data for both front-end user experiences and back-end analytics.
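To illustrate how one list of person locations can serve both the front end and the back end, here is a minimal Python sketch. The article does not describe Genius Framing’s actual framing logic, so everything here is an assumption: the 10% margin, the 16:9 target shape, centring on the group, and the simple count-based occupancy flag. `frame_people` and `room_stats` are hypothetical names, not part of any Huddly API.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def frame_people(people: List[Box], frame_w: float, frame_h: float,
                 margin: float = 0.1, aspect: float = 16 / 9) -> Box:
    """Padded crop with the target aspect ratio, centred on the group (boxes must have positive size)."""
    if not people:
        return (0.0, 0.0, frame_w, frame_h)  # nobody detected: show the full view
    x1, y1 = min(b[0] for b in people), min(b[1] for b in people)
    x2, y2 = max(b[2] for b in people), max(b[3] for b in people)
    # Pad the group so nobody sits right on the edge of the picture.
    w = (x2 - x1) * (1 + 2 * margin)
    h = (y2 - y1) * (1 + 2 * margin)
    # Widen or heighten the crop until it has the target aspect ratio.
    if w / h < aspect:
        w = h * aspect
    else:
        h = w / aspect
    # Shrink uniformly (keeping the aspect ratio) if the crop is larger than the full view.
    scale = min(1.0, frame_w / w, frame_h / h)
    w, h = w * scale, h * scale
    # Centre on the group, then slide the crop back inside the full view if needed.
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    left = max(0.0, min(cx - w / 2, frame_w - w))
    top = max(0.0, min(cy - h / 2, frame_h - h))
    return (left, top, left + w, top + h)


def room_stats(people: List[Box]) -> Dict[str, object]:
    """The kind of numbers an analytics service could derive from the same detections."""
    return {"people_count": len(people), "occupied": len(people) > 0}


people = [(800, 400, 900, 700), (1000, 420, 1100, 720)]
print(frame_people(people, 1920, 1080))
print(room_stats(people))
```

Scaling width and height by the same factor keeps the crop’s shape fixed, so the far end never sees a stretched picture.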

Through the accompanying SDK, organizations can use the camera as they see fit. The Huddly SDK allows partners, integrators, and developers to control the AI-powered Huddly IQ conference camera and take advantage of its advanced analytics to build their own exciting features and experiences.

The case for our camera as an excellent addition to any workplace is therefore twofold: the built-in Machine Learning algorithm delivers amazing AI experiences straight out of the box, while the SDK provides advanced analytics that can be used for facility management and more.
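As an illustration of the facility-management side, the sketch below turns a series of people counts for one room into a few summary numbers. The input format (a plain list of evenly spaced counts), the `UsageSummary` class and the `summarize` function are hypothetical and do not reflect the interface of the InSights API or the Huddly SDK.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UsageSummary:
    utilization: float        # fraction of samples in which the room was occupied
    avg_when_occupied: float  # mean head count over occupied samples only
    peak: int                 # largest head count seen


def summarize(counts: List[int]) -> UsageSummary:
    """Summarize evenly spaced people-count samples for one room."""
    if not counts:
        return UsageSummary(0.0, 0.0, 0)
    occupied = [c for c in counts if c > 0]
    return UsageSummary(
        utilization=len(occupied) / len(counts),
        avg_when_occupied=sum(occupied) / len(occupied) if occupied else 0.0,
        peak=max(counts),
    )


# Hypothetical samples, e.g. one every 15 minutes over an afternoon.
print(summarize([0, 0, 4, 5, 5, 0, 2, 2]))
```

For these samples the room is occupied 62.5% of the time with about 3.6 people on average when in use, the kind of figure that can inform decisions about room sizes and investment.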

As mentioned, progress in Machine Learning in recent years has been nothing short of jaw-dropping, and the future of AI lies in dedicated hardware like the Myriad X. With such hardware, we can run impressive models from the forefront of development. State of the art is only an update away.